Week 0

- A reference to all symbols used in these notes: Linear Algebra Symbols

- A list of problems associated with these notes: 471-ProblemList

- A series of unanswered questions about linear algebra: 471-QuestionBank

- References to category theory: Category Theory For Babies, Category Theory

- TikZ testing ground: 471-ImagesTest

- Homework: 471-Homework

Coordinate Maps

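Given a vector space V over F and a basis $B = (v_1, \dots, v_n)$, the coordinate map is the following isomorphism: $$[\,\cdot\,]_B : V \to F^n, \qquad v \mapsto [v]_B = \begin{bmatrix} a_1 \\ \vdots \\ a_n \end{bmatrix}$$

where $v = a_1 v_1 + \dots + a_n v_n$.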

Week 1

Lecture 1 : Systems of Equations

Solution Space

Given a field F and a system of m linear equations in n variables,

the solution space is the set of all n-tuples $(x_1, \dots, x_n) \in F^n$ that satisfy every equation. Exactly one of the following cases occurs:

- The system is not solvable: then there are no solutions to the system.

- The system is solvable: then there is either exactly 1 solution or more than one. Over an infinite field such as $\mathbb{R}$, more than one means infinitely many (no other finite number is possible; think about lines intersecting). Over a finite field with $q$ elements, a solvable system with $k$ free variables has exactly $q^k$ solutions.

Back Substitution

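A method of solving a system of equations where the equations are shaped like a triangle (an upper triangular system) with no 0's on the diagonal: solve the last equation for the last variable, substitute it into the equation above, and keep working upward!

Example

We assume that every diagonal coefficient $a_{ii} \neq 0$, OR WE GET DIVISION BY ZERO!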

Unique Solution


Infinitely Many Solutions

Note that this system has infinitely many solutions! Hence, we can form a solution space, where
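Back substitution is easy to sketch in Python. This is a minimal version, assuming the system is already upper triangular and stored as a list of rows `U` with right-hand side `b`; the name `back_substitute` and the example system are illustrative, and `Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction

def back_substitute(U, b):
    """Solve U x = b, where U is upper triangular with no zeros on the diagonal."""
    n = len(U)
    x = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):                  # start from the last equation
        if U[i][i] == 0:
            raise ZeroDivisionError(f"zero on the diagonal in row {i}")
        # subtract the contribution of the variables we have already solved for
        known = sum(Fraction(U[i][j]) * x[j] for j in range(i + 1, n))
        x[i] = (Fraction(b[i]) - known) / U[i][i]
    return x

# 2x + y - z = 3,   3y + z = 7,   2z = 2
print(*back_substitute([[2, 1, -1], [0, 3, 1], [0, 0, 2]], [3, 7, 2]))  # 1 2 1
```

The `ZeroDivisionError` branch is exactly the "no 0's on the diagonal" assumption.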

Symbolic representation of systems of linear equations

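We can represent any system of m equations in n variables in the following way: $A\mathbf{x} = \mathbf{b}$, where $A = (a_{ij}) \in M_{m \times n}(F)$ is the matrix of coefficients, $\mathbf{x} = (x_1, \dots, x_n)^\top$ is the column of unknowns and $\mathbf{b} = (b_1, \dots, b_m)^\top$ is the column of constants.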

Lecture 2 : E.R.O and Equivalent Systems

Elementary Row Operations (ERO)

Operations that can be performed on any system, of the following three types:

- Scalar multiplication: multiply the ith equation by a scalar $a$, where $a \neq 0$

- Addition: replace the jth equation with (jth equation) + $a \cdot$ (ith equation), where $i \neq j$

- Permutation: swap the ith and jth equations
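The reason row equivalence works in both directions is that each elementary row operation can be undone by another operation of the same type. A small sketch, treating a system as its augmented matrix (one row per equation); the helper names `scale`, `add_multiple` and `swap` are made up for illustration:

```python
from fractions import Fraction

def scale(rows, i, a):
    """Multiply row i by a nonzero scalar a (returns a new matrix)."""
    if a == 0:
        raise ValueError("scaling by 0 is not an elementary row operation")
    new = [r[:] for r in rows]
    new[i] = [a * x for x in new[i]]
    return new

def add_multiple(rows, j, i, a):
    """Replace row j by (row j) + a * (row i), where i != j."""
    if i == j:
        raise ValueError("i and j must be different rows")
    new = [r[:] for r in rows]
    new[j] = [x + a * y for x, y in zip(new[j], new[i])]
    return new

def swap(rows, i, j):
    """Swap rows i and j."""
    new = [r[:] for r in rows]
    new[i], new[j] = new[j], new[i]
    return new

# Augmented matrix of x + 2y = 3, 4x + 5y = 6 (rows are equations)
M = [[Fraction(1), Fraction(2), Fraction(3)],
     [Fraction(4), Fraction(5), Fraction(6)]]
a = Fraction(3)
assert scale(scale(M, 0, a), 0, 1 / a) == M                   # undo with 1/a
assert add_multiple(add_multiple(M, 1, 0, a), 1, 0, -a) == M  # undo with -a
assert swap(swap(M, 0, 1), 0, 1) == M                         # a swap undoes itself
```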

Row equivalent

We call two systems row equivalent if one can be obtained from the other by a finite sequence of elementary row operations.

Theorem 1.1 Equivalent Systems of Equations

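If two systems of linear equations are equivalent, then they have the same solution space.

Theorem 1.2: Row Equivalence $\Rightarrow$ Equivalent Systems

Proof: Suppose two systems are row equivalent; then

- Each elementary row operation produces an equivalent system: apply one operation to a system. Every solution of the original system still solves the new one, and since each operation can be undone by another elementary row operation, every solution of the new system also solves the original.

You must prove it for each individual operation

Corollary 1.3: Homogeneous row-equivalent systems. Let $A$ and $B$ be two row-equivalent matrices; then the homogeneous systems $A\mathbf{x} = 0$ and $B\mathbf{x} = 0$ have exactly the same solutions!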

Example

Theorem 1.2 Solutions to equivalent homogeneous systems

Row-Reduced Echelon Form (RREF)

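A matrix (or system of linear equations) is in RREF if it satisfies the following requirements:

- The first nonzero entry in each nonzero row is equal to 1

- Each column that contains the leading nonzero entry of some row has all its other entries equal to 0

- Every zero row lies below all nonzero rows, and the leading 1s move strictly to the right as we go down the rows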
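A sketch of reducing a matrix to RREF using only the three elementary row operations; `rref` is a hypothetical helper, it works on a list of rows, and exact `Fraction` arithmetic is assumed to avoid floating-point noise:

```python
from fractions import Fraction

def rref(rows):
    """Return the row-reduced echelon form of a matrix given as a list of rows."""
    A = [[Fraction(x) for x in r] for r in rows]
    m, n = len(A), len(A[0])
    pivot_row = 0
    for col in range(n):
        # find a row at or below pivot_row with a nonzero entry in this column
        pr = next((r for r in range(pivot_row, m) if A[r][col] != 0), None)
        if pr is None:
            continue
        A[pivot_row], A[pr] = A[pr], A[pivot_row]              # permutation
        lead = A[pivot_row][col]
        A[pivot_row] = [x / lead for x in A[pivot_row]]        # scale: leading entry 1
        for r in range(m):                                     # addition: clear the column
            if r != pivot_row and A[r][col] != 0:
                c = A[r][col]
                A[r] = [x - c * y for x, y in zip(A[r], A[pivot_row])]
        pivot_row += 1
        if pivot_row == m:
            break
    return A
```

Each pivot column ends up with a single 1 and zeros elsewhere, and zero rows sink to the bottom.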

Lecture 3 :

- Added Example to RREF example

Week 2

Lecture 4 :

Week 8

Lecture 20

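Theorem:

Given a basis $B = (v_1, \dots, v_n)$ of $V$, a linear map $T: V \to W$

is the same thing as an n-tuple of vectors $(w_1, \dots, w_n)$ in $W$. Namely:

- Given $T$, define the n-tuple as $(w_1, \dots, w_n) = (T(v_1), \dots, T(v_n))$.

- Conversely, given $(w_1, \dots, w_n)$, define $T$ by declaring that $T(a_1 v_1 + \dots + a_n v_n) = a_1 w_1 + \dots + a_n w_n$.

- This allows us to uniquely define a linear transformation $T$ such that $T(v_i) = w_i$ for every $i$.

To be even more explicit, we can also fix a basis of W.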
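Here is the theorem as a small Python sketch: the map is completely determined by the chosen images `ws` of the basis vectors, and it acts on the coordinates of a vector with respect to that basis. The name `map_from_images` and the example images are hypothetical.

```python
def map_from_images(ws):
    """Return the linear map T determined by T(v_i) = w_i.

    T takes the B-coordinates (a_1, ..., a_n) of a vector v and returns
    a_1 w_1 + ... + a_n w_n, each w_i being a vector in F^m.
    """
    m = len(ws[0])
    def T(coords):
        if len(coords) != len(ws):
            raise ValueError("need one coordinate per basis vector")
        return [sum(a * w[k] for a, w in zip(coords, ws)) for k in range(m)]
    return T

# Hypothetical choice: v_1 -> (1, 0, 2) and v_2 -> (0, 1, -1)
T = map_from_images([[1, 0, 2], [0, 1, -1]])
print(T([3, 4]))   # [3, 4, 2], i.e. 3*(1, 0, 2) + 4*(0, 1, -1)
```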

Examples

- Polynomials of fixed degree, describing their transformations: let's choose a standard basis for the polynomial space. Then we could choose a triple of vectors. Using the theorem above, the transformation is defined by the following

Definition :

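The matrix of $T: V \to W$ relative to the ordered bases $B = (v_1, \dots, v_n)$ of $V$ and $C$ of $W$ is the matrix whose $j$th column is the coordinate vector $[T(v_j)]_C$.

Its number of rows is $m = \dim W$ and its number of columns is $n = \dim V$.

Note

Or, for each basis vector: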

Exercises

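Rotations on $\mathbb{R}^2$: let's denote the (counterclockwise) rotation of $\mathbb{R}^2$ by an angle $\theta$ around the origin as the following transformation

and the standard basis $B = (e_1, e_2)$; then $R_\theta(e_1) = (\cos\theta, \sin\theta)$ and $R_\theta(e_2) = (-\sin\theta, \cos\theta)$.

Therefore, the matrix for rotations on the Cartesian plane is:

$$\begin{bmatrix} \cos\theta & -\sin\theta\\ \sin\theta & \cos\theta \end{bmatrix}$$

We used the following properties (which we will prove later!):

Let's denote the standard basis of each vector space respectively. Then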

Write the matrix for the following transformation

Lecture 21

- Write somewhere

- Consider a vector space with its standard basis. Then we can express each vector in terms of coordinate vectors such that

- Here is a better example: fix a basis of a polynomial space; then we can express polynomials as coordinate vectors, which gives us bijections between vector spaces!
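To make the coordinate bijection concrete, here is a sketch for polynomials of degree at most 2, assuming the (hypothetical) basis $(1,\ 1 + x,\ 1 + x + x^2)$ and writing a polynomial $c_0 + c_1x + c_2x^2$ as the tuple `(c0, c1, c2)`:

```python
def coords(p):
    """Coordinates of c0 + c1 x + c2 x^2 in the hypothetical basis (1, 1 + x, 1 + x + x^2)."""
    c0, c1, c2 = p
    return (c0 - c1, c1 - c2, c2)

def from_coords(a):
    """Back to standard coefficients: a0*1 + a1*(1 + x) + a2*(1 + x + x^2)."""
    a0, a1, a2 = a
    return (a0 + a1 + a2, a1 + a2, a2)

p = (2, 5, -3)            # 2 + 5x - 3x^2
print(coords(p))          # (-3, 8, -3)
assert from_coords(coords(p)) == p
```

The formulas in `coords` come from matching coefficients in $a_0 + a_1(1 + x) + a_2(1 + x + x^2) = c_0 + c_1x + c_2x^2$; the two functions are inverse bijections.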

Lemma : Coordinate Vectors in Linear Transformations

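If $T: V \to W$ is linear, $B$ is a basis of $V$ and $C$ is a basis of $W$, then $[T(v)]_C = A\,[v]_B$ for every $v \in V$, where $A$ is the matrix of $T$ relative to $B$ and $C$.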

Proof

Remark: This is basically multiplying a matrix by a vector! Given an $m \times n$ matrix $A = (a_{ij})$ and a vector $x \in F^n$, we produce a vector $Ax \in F^m$, where the ith component is $\sum_{j=1}^{n} a_{ij}x_j$.
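A sketch of the matrix-vector product from the remark, together with the lemma in action. The example (differentiation from polynomials of degree at most 2 to degree at most 1, in the bases $(1, x, x^2)$ and $(1, x)$) is an assumption chosen for illustration:

```python
def mat_vec(A, x):
    """Return A x, whose ith component is sum_j a_ij x_j."""
    if any(len(row) != len(x) for row in A):
        raise ValueError("A must have len(x) columns")
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]

# d/dx from P_2 (basis 1, x, x^2) to P_1 (basis 1, x).
# Column j holds the coordinates of the derivative of the jth basis vector.
D = [[0, 1, 0],
     [0, 0, 2]]
p = [2, 5, -3]            # coordinates of 2 + 5x - 3x^2
print(mat_vec(D, p))      # [5, -6], i.e. p' = 5 - 6x
```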

Lets examine a matrix

The Motivation? MATRIX MULTIPLICATION

Theorem : Matrix Multiplication

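Let $U, V, W$ be vector spaces with bases $B_U, B_V, B_W$, and let $S: U \to V$ and $T: V \to W$ be linear maps; then the matrix of $T \circ S$ (relative to $B_U$ and $B_W$) is the product of the matrix of $T$ (relative to $B_V$ and $B_W$) and the matrix of $S$ (relative to $B_U$ and $B_V$).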

If we define , then

Digression : The Algebra of Linear Transformations

Groups, Rings, Fields, VectorSpaces

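(Prop) Fun(S, V) is an F.V.S. (The Soul Stone of Linear Algebra!)

Consider a set $S$ and an F.V.S. $V$; then the set of all possible functions from $S$ to $V$ (denoted $\mathrm{Fun}(S, V)$, or $V^S$ in set theory) is an F.V.S.

Proof: For $f, g \in \mathrm{Fun}(S, V)$, a scalar $a \in F$ and an arbitrary element $s \in S$, define $(f + g)(s) = f(s) + g(s)$ and $(af)(s) = a\,f(s)$. Then we have to show that it is an abelian group under addition that respects scalar multiplication!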

- Abelian Group under Addition

- Respects scalar multiplication

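Since $a$ is an arbitrary scalar and $s$ is an arbitrary element of the set, every vector space axiom holds pointwise (each one reduces to the corresponding axiom in $V$). Therefore, it follows all of the vector space axioms, hence $\mathrm{Fun}(S, V)$ is a vector space!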

Why isn't an F.V.S.

Why is this so powerful?

Think about Endgame: the Soul Stone is able to take any soul and put it into any other soul. In this case, we can take the soul of the set and transform it into an FVS (given that it's being mapped into an FVS)! This powerful tool is the backbone of why matrices work!

Homomorphisms and using the Soul Stone

- While the Soul Stone is quite unstable, we can make it more stable through homomorphisms!

Homomorphism :

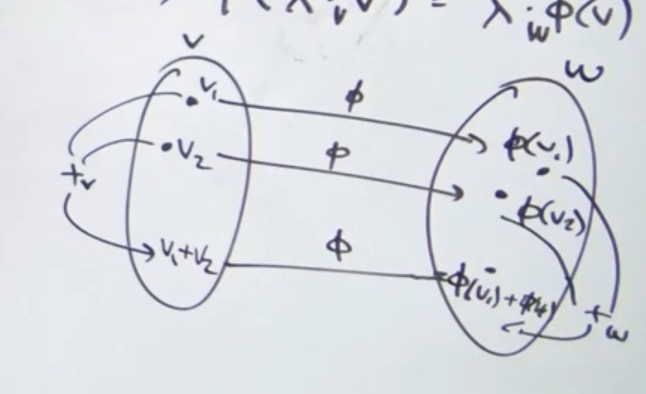

A homomorphism is a function between two algebraic structures that preserves their structure. In our case, if we want to go between two FVSs, say V and W, we want to define a vector space homomorphism over the same field, which preserves the vector space operations!

Suppose the set we start from were itself an FVS; we will call it V. What do we get out of it?

- From the prop, we can easily see that the functions from V to W form a vector space

- These mappings are also Vector Space Homomorphisms over the same field F

(prop) Linearity is a Vector Space Homomorphism

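For any vector spaces $V, W$ over the same field $F$, a function $T: V \to W$ is a vector space homomorphism (the same thing as saying that $T$ is linear) if for any $u, v \in V$ and $a \in F$: $T(u + v) = T(u) + T(v)$ and $T(av) = a\,T(v)$.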

Visual :

Side note on linearity/linear maps

- Linear algebra is an old subject, with its strongest roots dating back to the 19th century, so a lot of its jargon has stuck with it into the 21st century. The concept of linearity (19th-century jargon) is the same idea as a vector space homomorphism over the same field (contemporary jargon); notice how cacophonous the latter is, so "linear" is just nicer to use.

- Note: this construction preserves the vector space operations (addition and scalar multiplication), and hence all of the structure described by the 10 axioms of a vector space!

- For the rest of the notes, I will also omit the sub- and superscripts when we know beforehand that the homomorphism is linear (also known as a linear transformation).

- We will transition to shorter notation to denote linear transformations between two vector spaces.

- Visual: Linear maps:

Proof and . Then for any two vectors

The space of all linear maps

(prop) is a Vector Space

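Let's consider the subset of functions from V to W consisting of the linear ones, denoted $L(V, W)$. Then $L(V, W)$ is an FVS.

Proof (Simple :D): The set of all functions from V to W is a vector space, so we only need to show that $L(V, W)$ is a subspace, and we can show this through linearity!

- Linearity proves closure under addition

Let $(T + S)(v) = T(v) + S(v)$ define addition for any vector $v \in V$. We will also denote this shorthand $T + S$; then $T + S$ is linear:

Suppose we pick two vectors $u, v \in V$; then

- Linearity proves closure under scalar multiplication: let $(aT)(v) = a\,T(v)$ define scalar multiplication for any vector $v \in V$. We also represent this operation as $aT$; then $aT$ is linear: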

Suppose we pick vectors and scalars

Notation , as it provides more detail, is 19th century notation

I will denote

Function Compositions over Vector Spaces

Consider 3 vector spaces $U, V, W$ and 2 functions $S: U \to V$ and $T: V \to W$. We can apply the same composition rules to vector spaces, as the functions can be composed as $T \circ S: U \to W$

This naturally gives us the following map

Which can be restricted even further to:

Linearity and Bilinearity

Compositions of Linear Maps are linear!

Proof: Let $S$ and $T$ be linear; then for vectors $u, v$ and a scalar $a$, $(T \circ S)(au + v) = T(aS(u) + S(v)) = a\,T(S(u)) + T(S(v))$. This guarantees us the fact that $T \circ S$ is linear.

This implies bilinearity!

Def (Bilinearity): linear with respect to each of the two arguments.

Claim: composition $(T, S) \mapsto T \circ S$ is bilinear.

Proof

Remark (bilinearity in rings): let R be a ring with two binary operations; then the distributive laws mean that multiplication is bilinear (additive in each argument).

There is some connection between the two!

Week 9

Lecture 22

- Definition of Bilinearity

- Proved the Bilinearity

- Showed Bilinearity in rings

Associativity of composition

Let $A, B, C, D$ be sets, and let's define the following functions $f: A \to B$, $g: B \to C$, $h: C \to D$; then: $$h \circ (g \circ f) = (h \circ g) \circ f$$

Proof

Remark , might not make sense. Take but note that

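Composition of arbitrary functions does not make the set of all functions $V \to V$ into a ring, since given any non-additive $h$, in general $h \circ (f + g) \neq h \circ f + h \circ g$.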

The Algebra of Endomorphisms!

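We denote the set of endomorphisms (linear maps from V to itself) by $\mathrm{End}(V)$, so

$\mathrm{End}(V)$ forms a ring under $+$ and $\circ$

We can define addition and multiplication in the following ways: $(S + T)(v) = S(v) + T(v)$ and $ST = S \circ T$. We can define two special elements as well: the zero map $\mathbf{0}(v) = 0$ and the identity $\mathrm{Id}(v) = v$ (I will note that the zero function is often denoted by the zero element of the ring, but I want to introduce this new notation for even more clarity; it follows a similar style to the identity). Collecting all of these items, we can form $\mathrm{End}(V)$ into a ring! In particular, we can see that $\circ$ distributes over $+$. Take note that this ring is not commutative when $\dim V \geq 2$, as $S \circ T \neq T \circ S$ for some particular $S$ and $T$.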
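A quick check that the ring of endomorphisms is not commutative, sketched with $2 \times 2$ matrices standing in for endomorphisms of $F^2$ (the particular maps `S` and `T` are an arbitrary choice):

```python
def compose(S, T):
    """Matrix of S ∘ T for 2x2 matrices (apply T first, then S)."""
    return [[sum(S[i][k] * T[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

S = [[0, 1], [0, 0]]   # e_1 -> 0,   e_2 -> e_1
T = [[0, 0], [1, 0]]   # e_1 -> e_2, e_2 -> 0
print(compose(S, T))   # [[1, 0], [0, 0]]
print(compose(T, S))   # [[0, 0], [0, 1]]
```

So $S \circ T \neq T \circ S$ here, even though both are perfectly good elements of the ring.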

Exercise Orthogonal Reflections

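Let's examine $\mathrm{End}(\mathbb{R}^2)$. One example of an endomorphism would be an orthogonal reflection of the Cartesian plane. For example, consider a line through the origin at angle $\alpha$ from the x-axis. We can consider the following transformation: reflect every vector across this line.

Hence, using geometry, $e_1$ (at angle $0$) is sent to the unit vector at angle $2\alpha$, i.e. $(\cos 2\alpha, \sin 2\alpha)$:

and $e_2$ (at angle $\pi/2$) is sent to the unit vector at angle $2\alpha - \pi/2$, i.e. $(\sin 2\alpha, -\cos 2\alpha)$:

Giving us the following matrix for orthogonal reflection over any line through the origin: $$\begin{bmatrix} \cos 2\alpha & \sin 2\alpha\\ \sin 2\alpha & -\cos 2\alpha \end{bmatrix}$$

Method 2 (courtesy of Stack Exchange; requires matrix multiplication, which will be proven later): let's denote the reflection over the line at angle $\alpha$ from the x-axis by $\mathrm{Ref}_\alpha$. If we consider reflection over the x-axis ($\alpha = 0$), we get the following matrix: $$[\mathrm{Ref}_0] = \begin{bmatrix} 1 & 0\\ 0 & -1 \end{bmatrix}$$

Now, instead of considering rotations of the basis vectors, suppose we rotate the line instead. Let's consider rotations by $\alpha$ around the origin. First we rotate our line and vector back by $-\alpha$ (so the line lies on the x-axis), then reflect across the x-axis, then rotate back by $\alpha$ to our original angle: $\mathrm{Ref}_\alpha = R_\alpha \circ \mathrm{Ref}_0 \circ R_{-\alpha}$!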
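A numerical sanity check of Method 2, assuming angles in radians and a floating-point tolerance; `rot`, `ref_by_conjugation` and `close` are hypothetical helper names, and `REF_0` is the reflection across the x-axis. It compares rotate-reflect-rotate with the closed-form reflection matrix $\begin{bmatrix}\cos 2\alpha & \sin 2\alpha\\ \sin 2\alpha & -\cos 2\alpha\end{bmatrix}$:

```python
import math

def mul(A, B):
    """Product of two 2x2 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def rot(t):
    """Counterclockwise rotation by t radians."""
    return [[math.cos(t), -math.sin(t)], [math.sin(t), math.cos(t)]]

REF_0 = [[1, 0], [0, -1]]            # reflection across the x-axis

def ref_by_conjugation(alpha):
    """Rotate by -alpha, reflect across the x-axis, rotate back by alpha."""
    return mul(rot(alpha), mul(REF_0, rot(-alpha)))

def close(A, B, tol=1e-12):
    return all(math.isclose(A[i][j], B[i][j], abs_tol=tol) for i in range(2) for j in range(2))

alpha = math.pi / 6                  # a line at 30 degrees
closed_form = [[math.cos(2 * alpha), math.sin(2 * alpha)],
               [math.sin(2 * alpha), -math.cos(2 * alpha)]]
assert close(ref_by_conjugation(alpha), closed_form)
```

The second column of the result is where $e_2$ lands, at angle $2\alpha - \pi/2$ (that is, $-30^\circ$ for $\alpha = 30^\circ$).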

Exercise : Composition of Reflections

Now suppose we have two lines $\ell_1$ and $\ell_2$ through the origin, and that the angle from $\ell_1$ to $\ell_2$ is $\theta$. Consider two vectors, one on each line, lying on $\ell_1$ and $\ell_2$ respectively. Then

(by the same construction)
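Reflecting across $\ell_1$ and then across $\ell_2$ should give a rotation by $2\theta$. A floating-point sketch, assuming the lines sit at angles $\alpha$ and $\beta$ from the x-axis (so $\theta = \beta - \alpha$), angles in radians, and the closed-form reflection matrix; the specific angles are arbitrary:

```python
import math

def mul(A, B):
    """Product of two 2x2 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def rot(t):
    """Counterclockwise rotation by t radians."""
    return [[math.cos(t), -math.sin(t)], [math.sin(t), math.cos(t)]]

def ref(alpha):
    """Reflection across the line through the origin at angle alpha."""
    c, s = math.cos(2 * alpha), math.sin(2 * alpha)
    return [[c, s], [s, -c]]

def close(A, B, tol=1e-12):
    return all(math.isclose(A[i][j], B[i][j], abs_tol=tol) for i in range(2) for j in range(2))

alpha, beta = 0.3, 1.1           # hypothetical angles of l_1 and l_2
theta = beta - alpha             # angle from l_1 to l_2
# reflect across l_1 first, then across l_2
assert close(mul(ref(beta), ref(alpha)), rot(2 * theta))
```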

Coordinate vector (2, 5, -3): in the standard basis it gives 2 + 5x - 3x^2; in the list (1+x, 1+x+x^2, 1+x+x^4) it gives 2(1+x) + 5(1+x+x^2) - 3(1+x+x^4) = 4 + 4x + 5x^2 - 3x^4

sin((1, 0))

- $\sin(-x) = -\sin(x)$ and $\cos(-x) = \cos(x)$

- $\cos(x - \pi/2) = -\sin(x)\sin(-\pi/2) = \sin(x)$

- $\sin(x - \pi/2) = \cos(x)\sin(-\pi/2) = -\cos(x)$

- $\sin(x + \pi/2) = \cos(x)$ and $\cos(x + \pi/2) = -\sin(x)$

- $\cos(-2\alpha + \pi/2) = -\sin(-2\alpha) = \sin(2\alpha)$ and $\sin(-2\alpha + \pi/2) = \cos(-2\alpha) = \cos(2\alpha)$

- Goal: $(\sin(2\alpha), -\cos(2\alpha))$

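It's the unit vector at angle $2\alpha - \pi/2$ from the positive x-axis

$\beta = \pi/2 - \alpha$ (the angle between the line and $e_2$)

If $\alpha$ is $30^\circ$,

then $\beta$ is $60^\circ$,

so the image of $e_2$ ends up at $-30^\circ$:

$-30^\circ = -90^\circ + 2 \cdot 30^\circ$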

Lecture 23

Matrix Multiplication

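Matrix Multiplication Operation: let $A = (a_{ik})$ be an $m \times n$ matrix and $B = (b_{kj})$ be an $n \times p$ matrix; then $AB$ is the $m \times p$ matrix where $$(AB)_{ij} = \sum_{k=1}^{n} a_{ik}b_{kj}$$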
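A sketch of the multiplication formula, plus a check of the representation theorem in coordinates: applying $B$ and then $A$ to a vector gives the same result as applying the single matrix $AB$. The matrices and vector are arbitrary examples.

```python
def matmul(A, B):
    """(AB)_ij = sum_k a_ik b_kj for an m x n matrix A and an n x p matrix B."""
    n = len(B)
    if any(len(row) != n for row in A):
        raise ValueError("columns of A must equal rows of B")
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(len(B[0]))]
            for i in range(len(A))]

def mat_vec(A, x):
    """A x, with ith component sum_j a_ij x_j."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

A = [[1, 2], [0, 1], [3, -1]]   # 3 x 2: a map F^2 -> F^3
B = [[2, 0, 1], [1, 1, 0]]      # 2 x 3: a map F^3 -> F^2
x = [1, -2, 4]
print(matmul(A, B))             # [[4, 2, 1], [1, 1, 0], [5, -1, 3]]
# Applying B first and then A is the same as applying the single matrix AB.
assert mat_vec(A, mat_vec(B, x)) == mat_vec(matmul(A, B), x)
```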

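Matrix Multiplication Representation in terms of Linear Transformations

Suppose we have 3 vector spaces $U, V, W$ over a field F, with bases $B_U, B_V, B_W$ respectively, and 2 linear transformations $S: U \to V$ and $T: V \to W$; then the matrix of $T \circ S$ (relative to $B_U$ and $B_W$) is the product of the matrix of $T$ (relative to $B_V$ and $B_W$) and the matrix of $S$ (relative to $B_U$ and $B_V$).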

Proof

Rotations on $\mathbb{R}^2$: Let's define the following function (or, more sophisticatedly, linear transformation) on the coordinate plane ($\mathbb{R}^2$). Take any point $(x, y)$. We can define a rotation around the origin by an angle $\theta$ in the following way, where $B$ is the standard basis: $$[R_\theta]_B^B = \begin{bmatrix} \cos\theta & -\sin\theta\\ \sin\theta & \cos\theta \end{bmatrix}$$ Here is an example of this working: take the point (1, 1) and rotate it by $90^\circ$ around the origin. We can think of it as just a standard "matrix multiplication":

$$R_{90^\circ}((1, 1)) = \begin{bmatrix} \cos(90^\circ) & -\sin(90^\circ)\\ \sin(90^\circ) & \cos(90^\circ) \end{bmatrix}\begin{bmatrix} 1 \\ 1 \end{bmatrix} = \begin{bmatrix} 0 & -1\\ 1 & 0 \end{bmatrix}\begin{bmatrix} 1 \\ 1 \end{bmatrix} = \begin{bmatrix} 0\cdot 1 + (-1)\cdot 1 \\ 1\cdot 1 + 0\cdot 1 \end{bmatrix} = \begin{bmatrix} -1 \\ 1 \end{bmatrix}$$

Here is a visual of what we just did!

![[Pasted image 20240311114856.png|300]]

Big Proof Concept (Sum of Angles Formula!)

- Rotation $a$ + Rotation $b$ = Rotation $a + b$. Hence you can conceptualize the composition of these maps as adding the two angles: $R_a \circ R_b$ applies rotation by angle $b$ first, then by angle $a$ (rotations commute, so the order does not matter here). This gives us that: $$R_{a} \circ R_{b} = R_{a + b} = R_{b + a}$$

By the matrix multiplication theorem, $$[R_{a} \circ R_{b}]_B^B = [R_{a}]_B^B[R_b]_B^B,$$ which gives us the beautiful identity $$[R_{a+b}]_B^B = [R_{a}]_B^B[R_b]_B^B$$

What does this tell us? It gives us a quick way to compute $\cos(a + b)$ and $\sin(a + b)$: $$\begin{bmatrix} \cos(a + b) & -\sin(a + b)\\ \sin(a + b) & \cos(a + b) \end{bmatrix} = \begin{bmatrix} \cos(a) & -\sin(a)\\ \sin(a) & \cos(a) \end{bmatrix} \begin{bmatrix} \cos(b) & -\sin(b)\\ \sin(b) & \cos(b) \end{bmatrix}$$ Using matrix multiplication, we can easily see that $$\begin{aligned} \cos(a + b) &= \cos(a)\cos(b) - \sin(a)\sin(b)\\ \sin(a + b) &= \sin(a)\cos(b) + \cos(a)\sin(b) \end{aligned}$$

Extending this phenomenon further

Setting $b = a$: $$\begin{aligned} \cos(2a) &= \cos(a)\cos(a) - \sin(a)\sin(a) = \cos^2(a) - \sin^2(a)\\ \sin(2a) &= \sin(a)\cos(a) + \cos(a)\sin(a) = 2\sin(a)\cos(a) \end{aligned}$$

For three angles: $$[R_{a + b + c}]_B^B = [R_a \circ R_b \circ R_c]_B^B = [R_{a}]_B^B[R_b]_B^B[R_{c}]_B^B$$ $$\begin{aligned} [R_a]_B^B[R_b]_B^B[R_c]_B^B &= \begin{bmatrix} \cos(a) & -\sin(a)\\ \sin(a) & \cos(a) \end{bmatrix}\begin{bmatrix} \cos(b) & -\sin(b)\\ \sin(b) & \cos(b) \end{bmatrix}\begin{bmatrix} \cos(c) & -\sin(c)\\ \sin(c) & \cos(c) \end{bmatrix}\\ &= \begin{bmatrix} \cos(a) & -\sin(a)\\ \sin(a) & \cos(a) \end{bmatrix}\begin{bmatrix} \cos(b)\cos(c) - \sin(b)\sin(c) & -(\sin(b)\cos(c) + \cos(b)\sin(c))\\ \sin(b)\cos(c) + \cos(b)\sin(c) & \cos(b)\cos(c) - \sin(b)\sin(c) \end{bmatrix} \end{aligned}$$
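A floating-point check of the sum-of-angles argument: multiplying rotation matrices matches rotating by the summed angle, and the entries reproduce the $\cos(a + b)$ and $\sin(a + b)$ formulas. Angles are in radians, and the values of `a`, `b`, `c` are arbitrary.

```python
import math

def mul(A, B):
    """Product of two 2x2 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def rot(t):
    """Matrix of the counterclockwise rotation by t radians in the standard basis."""
    return [[math.cos(t), -math.sin(t)], [math.sin(t), math.cos(t)]]

def close(A, B, tol=1e-12):
    return all(math.isclose(A[i][j], B[i][j], abs_tol=tol) for i in range(2) for j in range(2))

a, b, c = 0.4, 1.3, -0.7            # arbitrary angles in radians

# [R_a][R_b] = [R_{a+b}]
AB = mul(rot(a), rot(b))
assert close(AB, rot(a + b))
# Reading off entries gives the sum-of-angles formulas.
assert math.isclose(AB[0][0], math.cos(a) * math.cos(b) - math.sin(a) * math.sin(b))
assert math.isclose(AB[1][0], math.sin(a) * math.cos(b) + math.cos(a) * math.sin(b))
# Three rotations: [R_a][R_b][R_c] = [R_{a+b+c}]
assert close(mul(rot(a), mul(rot(b), rot(c))), rot(a + b + c))
```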

Commutative Diagrams

Lecture 24

Change Of Coordinates Formula

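Suppose V is a vector space over F and we are given two bases, $B$ and $B'$.

Given any vector $v \in V$, there naturally exist two coordinate vectors, $[v]_B$ and $[v]_{B'}$.

These two coordinate vectors naturally give rise to the identity transformation $\mathrm{Id}: V \to V$, viewed with respect to $B$ on one side and $B'$ on the other. Combining our previous ideas,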

(Remember that !)

Commutative Diagram

Remark: to find the coordinate vector $[v]_B = (a_1, \dots, a_n)$, where $B = (v_1, \dots, v_n)$, you find the solution to $a_1 v_1 + \dots + a_n v_n = v$:

This is an easy computation, as it is just a system of linear equations. To find

Change of a Matrix of a linear map

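Suppose $T: V \to W$ is linear, and let $B$ and $C$ be bases of $V$ and $W$. From the previous theorem, we get the matrix $A$ of $T$ relative to $B$ and $C$, characterized by $[T(v)]_C = A[v]_B$. Now choose another pair of bases, $B'$ and $C'$, which will give rise to a matrix $A'$ characterized by $[T(v)]_{C'} = A'[v]_{B'}$:

How are $A$ and $A'$ related?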

Commutative diagram . This is what we get in general!

Insert Second COmm diagram

Change of basis Matrix

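Let V be a vector space with basis $B = (v_1, \dots, v_n)$. If the vectors $v'_j = \sum_{i} p_{ij} v_i$ $(j = 1, \dots, n)$ make up a basis $B' = (v'_1, \dots, v'_n)$, then the matrix $P = (p_{ij})$ is called the change of basis matrix. Equivalently: $B'$ is a basis if and only if $P$ is invertible. Therefore, in order to change bases, we need an n-tuple of vectors, meaning we need an invertible $n \times n$ matrix.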

Recall that Fact:

How do we find the new coordinates?

Suppose we are given an old basis $B$ and a new basis $B'$ with change of basis matrix $P$, hence $[v]_B = P[v]_{B'}$. Find $[v]_{B'}$ either for some fixed $v$ or for all $v$.

Solution: If $v$ is fixed, then its new coordinates $x = [v]_{B'}$ are the solution to the system $Px = [v]_B$, which we can solve by row reduction. Now suppose that $v$ is arbitrary, so $[v]_B$ cannot be specified in advance. There are two ways to tackle this problem!

- This is where you need $P^{-1}$. Indeed, $[v]_{B'} = P^{-1}[v]_B$. In order to find the jth column of $P^{-1}$, we just solve the $n \times n$ system $Px = e_j$, where the 1 is located in the jth component. Hence, we solve all n systems at once with the augmented matrix $$\left[\begin{array}{ccc|ccc} p_{11} & \cdots & p_{1n} & 1 & & 0\\ \vdots & \ddots & \vdots & & \ddots & \\ p_{n1} & \cdots & p_{nn} & 0 & & 1 \end{array}\right]$$ Row reducing it will give us: $$\left[\begin{array}{ccc|ccc} 1 & & 0 & & & \\ & \ddots & & & * & \\ 0 & & 1 & & & \end{array}\right]$$ where the $*$ is $P^{-1} = [Id]^B_{B'}$
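A sketch of the augmented-matrix method: row reduce $[P \mid I]$ until the left block is $I$, and read $P^{-1}$ off the right block. It assumes the convention that the columns of $P$ hold the old-basis coordinates of the new basis vectors, so that $[v]_{B'} = P^{-1}[v]_B$; `inverse` is a hypothetical helper name and the example matrices are arbitrary.

```python
from fractions import Fraction

def inverse(P):
    """Row reduce [P | I] to [I | P^{-1}]; raises ValueError if P is singular."""
    n = len(P)
    M = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(P)]
    for col in range(n):
        pr = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pr is None:
            raise ValueError("matrix is not invertible")
        M[col], M[pr] = M[pr], M[col]
        lead = M[col][col]
        M[col] = [x / lead for x in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0:
                c = M[r][col]
                M[r] = [x - c * y for x, y in zip(M[r], M[col])]
    return [row[n:] for row in M]

# New basis v'_1 = v_1, v'_2 = v_1 + v_2, so P has columns (1, 0) and (1, 1).
P = [[1, 1], [0, 1]]
P_inv = inverse(P)
old = [3, 2]                                  # v = 3 v_1 + 2 v_2
new = [sum(a * b for a, b in zip(row, old)) for row in P_inv]
print(new)                                    # v = 1 v'_1 + 2 v'_2
```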

Week 10

God damn this theory is fucked and all over the place

- Prove that has a basis of n elements where half of them are left inverses of the dual of V and half of them are the right inverses of the dual V

- maybe show that it is a direct product?

Lecture 25 : The Dual Vector Space or Matrix Transposition

Matrix Transpose

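Given $A = (a_{ij}) \in M_{m \times n}(F)$, we define its transpose $A^\top \in M_{n \times m}(F)$ by $(A^\top)_{ij} = a_{ji}$.

Example

$$\begin{bmatrix}1&2&3\\4&5&6\end{bmatrix}^\top = \begin{bmatrix}1&4\\2&5\\3&6\end{bmatrix}$$

What is the meaning of $A^\top$?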

Linear Functionals and Dual Spaces

Let $V$ be a vector space over $F$; then its dual is defined to be $V^\vee = \{f : V \to F \mid f \text{ is linear}\}$.

We call the elements of $V^\vee$ linear functionals: each one is a linear function $V \to F$. Note: dual spaces are also denoted $V^*$, but I don't like that notation. Also, the star is often reserved for the adjoint!

, then a function from can be defined as

The space of integrable functions over a closed domain! take a polynomial

(Cor 1) $V^\vee$ forms a vector space

Proof: $V$ is a vector space and $F$ is a vector space (over itself), so the linear maps from $V$ to $F$ form a vector space!

Using Prop (Lecture 22), since

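(Cor 2) Basis of $V^\vee$ (Dual Basis): Let $(v_1, \dots, v_n)$ be a basis of V; then we can define the dual basis as the list of functions $v_1^\vee, \dots, v_n^\vee \in V^\vee$ such that $v_i^\vee(v_j) = \delta_{ij}$. Then $v_1^\vee, \dots, v_n^\vee$ form a basis for $V^\vee$. It's all part of the theory of functions in linear algebra!

Standard basis of $(F^n)^\vee$: Let $e_1, \dots, e_n$ be the standard basis of $F^n$; then the coordinate functionals $e_i^\vee(x_1, \dots, x_n) = x_i$ form the dual basis for the standard basis of $F^n$.

Before looking at the proof, I recommend examining the next theorem to build intuition first!

Proof: Pick an $f \in V^\vee$ and write $v = \sum_j a_j v_j$. Then $f(v) = \sum_j a_j f(v_j) = \sum_j f(v_j)\, v_j^\vee(v)$, since $v_j^\vee(v) = a_j$. Therefore, $f = \sum_j f(v_j)\, v_j^\vee$. Hence every linear functional is just a linear combination of the dual basis, so the $v_j^\vee$ span $V^\vee$; and if $\sum_j c_j v_j^\vee = 0$, evaluating at $v_k$ gives $c_k = 0$, so they are linearly independent.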
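For $V = F^2$ the dual basis can be computed concretely: if the basis vectors are the columns of a matrix $M$, the rows of $M^{-1}$ are the dual basis functionals, since $M^{-1}M = I$ says exactly $v_i^\vee(v_j) = \delta_{ij}$. A sketch with a hypothetical basis, also checking that $f = \sum_i f(v_i)\,v_i^\vee$:

```python
from fractions import Fraction

def inv2(M):
    """Inverse of a 2x2 matrix, exactly."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("columns do not form a basis")
    return [[d / det, -b / det], [-c / det, a / det]]

def apply(f, v):
    """A functional on F^2, stored as a row vector, applied to a vector."""
    return sum(fi * vi for fi, vi in zip(f, v))

basis = [[1, 1], [1, -1]]                  # hypothetical basis v_1 = (1, 1), v_2 = (1, -1)
M = [[basis[0][0], basis[1][0]],           # matrix with v_1, v_2 as columns
     [basis[0][1], basis[1][1]]]
dual = inv2(M)                             # row i of M^{-1} is v_i^vee

for i in range(2):
    for j in range(2):
        assert apply(dual[i], basis[j]) == (1 if i == j else 0)   # v_i^vee(v_j) = delta_ij

f = [3, 5]                                 # the functional f(x, y) = 3x + 5y
recombined = [sum(apply(f, basis[i]) * dual[i][k] for i in range(2)) for k in range(2)]
assert recombined == f                     # f = sum_i f(v_i) v_i^vee
```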

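(Cor 3) Every nonzero linear functional has a right inverse! (The anti-dual space) Let $f \in V^\vee$ be a nonzero linear functional.

- $f$ is injective only when $\dim V = 1$:

Suppose $f(v) = 0$. Nothing forces $v = 0$: for instance, in $F^2$ we have $e_1^\vee(e_2) = 0$ with $e_2 \neq 0$. In general the kernel of $f$ has dimension $\dim V - 1$, so the kernel is trivial, $\ker f = \{0\}$, exactly when $\dim V = 1$.

- $f$ is surjective:

Pick $v \in V$ with $f(v) = c \neq 0$. Then for any $a \in F$, $f(a c^{-1} v) = a$ (since every nonzero element of a field has a multiplicative inverse), therefore $f$ is onto $F$.

Therefore, every nonzero linear functional has a right inverse: define $s: F \to V$ by $s(a) = a c^{-1} v$, so that $f \circ s = \mathrm{Id}_F$ (a two-sided inverse exists only when $\dim V = 1$). Given a dual space with a dual basis $v_1^\vee, \dots, v_n^\vee$, the maps $s_i(a) = a v_i$ form a basis of the space of linear maps $F \to V$ (the "anti-dual"), and $v_i^\vee \circ s_j = \delta_{ij}\,\mathrm{Id}_F$.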

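(Cor 4) Basis of $L(V, W)$: Suppose $(v_1, \dots, v_n)$ and $(w_1, \dots, w_m)$ are bases of $V$ and $W$. Using the props from before, we know that the linear maps $V \to W$ form a vector space $L(V, W)$. Since both $V$ and $W$ are finite dimensional, the dimension will be $nm$ by a bunch of theorems we did earlier (and later); hence a basis of $L(V, W)$ will comprise $nm$ elements, for example the maps $E_{ij}$ determined by $E_{ij}(v_k) = \delta_{jk}\, w_i$:

(Thm) Dimension of $L(V, W)$: $\dim L(V, W) = \dim V \cdot \dim W$

Extended Proof: Let $\dim V = n$ and $\dim W = m$. For each $T \in L(V, W)$ we can record the n-tuple $(T(v_1), \dots, T(v_n))$, i.e. an $m \times n$ matrix of coordinates. So this mapping looks like this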

- is linear Let and

- is invertible Injective. is the only function that maps and is also the zero in the vector space of Surjective : Let be the basis of and be the basis of , then using lecture 20, we can define and using the fact that is linear, then clearly the

- Since this map is linear and invertible, we have proved that it is an isomorphism $L(V, W) \cong M_{m \times n}(F)$, meaning that $\dim L(V, W) = mn$.

- This proof is fascinating as it illustrates what the linear transformation does under the hood. Let Then

Proof Note: We really haven't built enough machinery to prove the complex components of this, plus it's really uninspiring

- Added fact (Groups, Rings, Fields, Vector Spaces): every field is a V.S. over itself, but not every V.S. is a field!

THE BEAUTY OF THE DUAL

Given any linear transformation $T: V \to W$ and the respective duals $V^\vee$, $W^\vee$, the dual linear transformation $T^\vee: W^\vee \to V^\vee$, $f \mapsto f \circ T$, is the composition pictured below!

(Lem) Matrices, Transpose, and Duals Oh My

Given a linear map $T: V \to W$, it defines the map $T^\vee: W^\vee \to V^\vee$ such that $T^\vee(f) = f \circ T$.

- Note: composition of functions is a bilinear operation: $f$ is linear and $T$ is linear, therefore $f \circ T$ is linear

What is the matrix of $T^\vee$?

Suppose we have fixed bases and , hence , then the dual bases, and Recall that We obtain that , hence these matrices are just transposes of each other!

(Lem) Linear maps and their transpose

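If $A = (a_{ij})$ is the matrix of $T$ relative to bases $(v_1, \dots, v_n)$ of $V$ and $(w_1, \dots, w_m)$ of $W$, then $A^\top$ is the matrix of $T^\vee$ relative to the dual bases $(w_1^\vee, \dots, w_m^\vee)$ and $(v_1^\vee, \dots, v_n^\vee)$.

Proof: By the definition of a dual basis, $(T^\vee w_i^\vee)(v_j) = w_i^\vee(T v_j) = w_i^\vee\big(\sum_k a_{kj} w_k\big) = a_{ij}$. Hence $T^\vee w_i^\vee = \sum_j a_{ij}\, v_j^\vee$: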

By definition
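A sketch of the lemma with standard bases: store functionals as row vectors, so $T^\vee(g) = g \circ T$ is the row vector $gA$, and the coordinates of a functional in the standard dual basis are just its entries. Column $i$ of the matrix of $T^\vee$ is the coordinate vector of $T^\vee(e_i^\vee)$, which is row $i$ of $A$. The matrix `A` is an arbitrary example.

```python
def transpose(A):
    return [list(col) for col in zip(*A)]

def compose_functional(g, A):
    """Row vector of g ∘ T, where T(x) = A x and g is a row vector on F^m."""
    return [sum(g[i] * A[i][j] for i in range(len(A))) for j in range(len(A[0]))]

A = [[1, 2, 3], [4, 5, 6]]          # T: F^3 -> F^2 in the standard bases
m = len(A)
dual_basis = [[1 if k == i else 0 for k in range(m)] for i in range(m)]   # e_1^vee, e_2^vee

# Column i of the matrix of T^vee holds the coordinates of T^vee(e_i^vee) = e_i^vee ∘ T.
columns = [compose_functional(g, A) for g in dual_basis]
matrix_of_dual = transpose(columns)
print(matrix_of_dual)               # [[1, 4], [2, 5], [3, 6]]
assert matrix_of_dual == transpose(A)
```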

Lecture 26: Matrix Transpose Properties

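(Prop) $(AB)^\top = B^\top A^\top$

Why the swap? Composing $S: U \to V$ and $T: V \to W$ gives rise to $(T \circ S)^\vee = S^\vee \circ T^\vee$, since $(T \circ S)^\vee(f) = f \circ T \circ S = S^\vee(T^\vee(f))$. They are the same map! So the matrices satisfy $(AB)^\top = B^\top A^\top$.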

Proof
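A numerical sanity check (not a proof) of $(AB)^\top = B^\top A^\top$ on arbitrary example matrices; note that without the swap the shapes do not even match:

```python
def transpose(A):
    return [list(col) for col in zip(*A)]

def matmul(A, B):
    """(AB)_ij = sum_k a_ik b_kj; needs cols(A) == rows(B)."""
    if any(len(row) != len(B) for row in A):
        raise ValueError("inner dimensions must agree")
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

A = [[1, 2, 3], [4, 5, 6]]        # 2 x 3
B = [[1, 0], [2, -1], [0, 3]]     # 3 x 2
assert transpose(matmul(A, B)) == matmul(transpose(B), transpose(A))
# Without the swap: A^T B^T is 3 x 3, while (AB)^T is 2 x 2.
assert len(matmul(transpose(A), transpose(B))) == 3
```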

The structure of a linear map

(Rank-Nullity Theorem)

Goal : Given a linear map , try to understand what it really does

19th century Interpretation

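Given a linear map $T: V \to W$ with matrix $A$, let $P$ and $Q$ be the change of basis matrices of $V$ and $W$ (so $[v]_B = P[v]_{B'}$ and $[w]_C = Q[w]_{C'}$). Then what is the matrix of $T$ in the new bases? It is $Q^{-1}AP$.

Question:

- Find invertible matrices P, Q such that $Q^{-1}AP$ is as nice as possible

(Thm) Change of Basis Matrix

Given finite-dimensional vector spaces $V, W$ and a linear transformation $T: V \to W$, there exist bases $B$ of $V$ and $C$ of $W$ such that the matrix of $T$ is $\begin{bmatrix} I_r & 0 \\ 0 & 0 \end{bmatrix}$, where $r = \operatorname{rank} T$

- Note: These bases are not unique, but any pair of such bases gives the same matrix!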
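A sketch connecting this to rank-nullity: one common proof of the normal form picks a basis of $V$ whose last $n - r$ vectors span $\ker T$, where $r$ is the rank. The code below (hypothetical helpers, exact `Fraction` arithmetic, an arbitrary example matrix) computes $r$ from the pivots of the RREF and a kernel basis from the free columns, then checks that $r + \dim\ker T = n$.

```python
from fractions import Fraction

def rref_with_pivots(rows):
    """Row reduce a matrix; return (RREF, list of pivot columns)."""
    A = [[Fraction(x) for x in r] for r in rows]
    m, n = len(A), len(A[0])
    pivots, r = [], 0
    for c in range(n):
        pr = next((i for i in range(r, m) if A[i][c] != 0), None)
        if pr is None:
            continue                               # no pivot in this column
        A[r], A[pr] = A[pr], A[r]
        lead = A[r][c]
        A[r] = [x / lead for x in A[r]]
        for i in range(m):
            if i != r and A[i][c] != 0:
                f = A[i][c]
                A[i] = [x - f * y for x, y in zip(A[i], A[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return A, pivots

def kernel_basis(rows):
    """One kernel vector per free (non-pivot) column."""
    R, pivots = rref_with_pivots(rows)
    n = len(rows[0])
    basis = []
    for free in (c for c in range(n) if c not in pivots):
        v = [Fraction(0)] * n
        v[free] = Fraction(1)
        for i, p in enumerate(pivots):
            v[p] = -R[i][free]                      # solve for the pivot variables
        basis.append(v)
    return basis

A = [[1, 2, 1, 0], [2, 4, 0, 2], [1, 2, 2, -1]]    # hypothetical T: F^4 -> F^3
rank = len(rref_with_pivots(A)[1])
kernel = kernel_basis(A)
print(rank, len(kernel))                            # 2 2, and 2 + 2 = 4 = dim of the domain
assert all(sum(a * x for a, x in zip(row, v)) == 0 for row in A for v in kernel)
assert rank + len(kernel) == len(A[0])
```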

Week 12

- Question: Why does he order the matrix in the determinant differently? I am used to a different notation for the matrix.

- FIX THIS HORRENDOUS SHIT NOTATION WHEN ADDING MORE VECTOR SPACES

- “Mathematics is about thinking about theory, not so much about memorizing computation”

- “Some times mistakes happen, and saying sorry is okay. But sometimes its about how you fix the problem” - Pizza Guy

- Finish the proof for the direct sums

Lecture 31 : 4/1 : Bi-Linear Functionals!

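Matrix? What is it? And what functions can be made using it?

Motivating example: let's define the matrix $A = \begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix} \in M_{2 \times 2}(F)$. Define $\det(A) = a_{11}a_{22} - a_{12}a_{21}$. This definition is a function $M_{2 \times 2}(F) \to F$! Note: this function is a polynomial in 4 variables!

Is this function linear? NO: IT IS QUADRATIC, but it can be linear. Suppose we fix the variables of one column, say $a_{12}, a_{22}$; then $\det$ can be considered a linear function of the other column $(a_{11}, a_{21})$, and vice versa. This is what is known as a bilinear function!

End Goal : Construction of the determinant of the matrix!

Trick: realize that the determinant is, up to proportionality, the unique n-linear alternating function of the n columns of a matrix, $F^n \times \dots \times F^n \to F$ (outside characteristic 2, alternating is the same as antisymmetric).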
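Bilinearity of the $2 \times 2$ determinant can be checked exactly with `Fraction`: it is linear in each column when the other column is fixed, but scaling both columns scales it by $c^2$. The helper names, test vectors and scalar are arbitrary.

```python
from fractions import Fraction

def det2(col1, col2):
    """det of the 2x2 matrix with columns (a11, a21) and (a12, a22)."""
    (a11, a21), (a12, a22) = col1, col2
    return a11 * a22 - a12 * a21

def combo(c, x, y):
    """The vector c*x + y in F^2."""
    return (c * x[0] + y[0], c * x[1] + y[1])

u, v, w = (1, 2), (Fraction(1, 3), -4), (5, 7)    # arbitrary columns
c = Fraction(5, 2)                                # arbitrary scalar

assert det2(combo(c, u, v), w) == c * det2(u, w) + det2(v, w)   # linear in column 1
assert det2(w, combo(c, u, v)) == c * det2(w, u) + det2(w, v)   # linear in column 2
# Not linear in the whole matrix: scaling both columns by c scales det by c^2.
zero = (0, 0)
assert det2(combo(c, u, zero), combo(c, w, zero)) == c**2 * det2(u, w)
```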

Bilinear Functions!

https://kconrad.math.uconn.edu/blurbs/linmultialg/bilinearform.pdf This whole approach is just taking another idea we already discovered! Given vector spaces with bases, a linear map is the same as the n-tuple of images of the basis vectors. Another example: a basis of V has a dual basis, and WE COME BACK TO THE DELTA FUNCTIONS!

AN even further generalization

Bilinear Functionals

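Let $V_1, V_2, W$ be vector spaces, and define a map $B: V_1 \times V_2 \to W$. We say $B$ is bilinear if it is linear with respect to each argument when the other is fixed, i.e. $B(au + v, y) = aB(u, y) + B(v, y)$ and $B(x, au + v) = aB(x, u) + B(x, v)$. If $B$ is bilinear, then we can express it as one summation: $$B\Big(\sum_i a_i v_{1i}, \sum_j b_j v_{2j}\Big) = \sum_{i,j} a_i b_j\, B(v_{1i}, v_{2j})$$ One takeaway: recall how distribution works, so the order of the sums over i and j does not matter!

Distribution in rings: if $R$ is a ring, then the two distributive laws show that multiplication $R \times R \to R$ is bilinear (additive in each argument)!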

If

A note on certain linear functionals:

The linear functionals we are going to examine in this next section will involve vector spaces of the same dimension n, so we will compress the notation even further below:

The set of all Bilinear Functionals Other sources may use

- It is an FVS (check Lecture 18): examine that it is a subspace of a vector space of functions, then done!

What is the basis of

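We know the bases of $V_1$ and $V_2$ to be $(v_{11}, \dots, v_{1n})$ and $(v_{21}, \dots, v_{2n})$. Let's denote the dual bases as $(v^{\vee}_{11}, \dots, v^{\vee}_{1n})$ and $(v^{\vee}_{21}, \dots, v^{\vee}_{2n})$. 1) We can then determine a bilinear map from its values on pairs of basis vectors: $$B = \sum_{i,j} B(v_{1i}, v_{2j})\, v^{\vee}_{1i}\, v^{\vee}_{2j}, \qquad \text{where } (v^{\vee}_{1i}\, v^{\vee}_{2j})(x, y) = v^{\vee}_{1i}(x)\, v^{\vee}_{2j}(y).$$ This function is quadratic! To check the formula, evaluate the right-hand side at a pair of basis vectors $(v_{1k}, v_{2l})$: $$\sum_{i,j} B(v_{1i}, v_{2j})\overbrace{v^{\vee}_{1i}(v_{1k})}^{\delta_{ik}}\overbrace{v^{\vee}_{2j}(v_{2l})}^{\delta_{jl}} = B(v_{1k}, v_{2l}),$$ and two bilinear maps that agree on all pairs of basis vectors are equal. 2) The monomial $v^{\vee}_{1i}\, v^{\vee}_{2j}$ is bilinear:

- Fix $y$; then $x \mapsto v^{\vee}_{1i}(x)\, v^{\vee}_{2j}(y)$ is linear. Or fix $x$; then $y \mapsto v^{\vee}_{1i}(x)\, v^{\vee}_{2j}(y)$ is linear.

- Therefore, the monomials $v^{\vee}_{1i}\, v^{\vee}_{2j}$ span the space of bilinear functionals.

(Lem) The monomials $v^{\vee}_{1i}\, v^{\vee}_{2j}$ are all linearly independent!

Proof: Suppose $\sum_{i,j} c_{ij}\, v^{\vee}_{1i}\, v^{\vee}_{2j} = 0$. Evaluating at $(v_{1k}, v_{2l})$ gives $c_{kl} = 0$ for every $k, l$. Therefore, every linear dependence relation is trivial!

Therefore, a basis of the space of bilinear functionals consists of the $n^2$ elements of the form $v^{\vee}_{1i}\, v^{\vee}_{2j}$, for all combinations of i and j.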
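A sketch of this decomposition for $V_1 = V_2 = F^2$ with the standard basis: the coefficient of the monomial $e_i^\vee e_j^\vee$ is $B(e_i, e_j)$, and the sum of monomials reproduces $B$ on any pair of vectors. The particular bilinear functional `B` is a hypothetical example.

```python
from fractions import Fraction
from itertools import product

def B(x, y):
    """Hypothetical bilinear functional on F^2 x F^2."""
    return x[0] * y[0] + 2 * x[0] * y[1] - x[1] * y[1]

def e(k, n=2):
    """Standard basis vector e_k of F^n."""
    return [1 if i == k else 0 for i in range(n)]

# Coefficient of the monomial e_i^vee e_j^vee is B(e_i, e_j).
M = [[B(e(i), e(j)) for j in range(2)] for i in range(2)]

def from_monomials(x, y):
    """sum_{i,j} B(e_i, e_j) * e_i^vee(x) * e_j^vee(y)"""
    return sum(M[i][j] * x[i] * y[j] for i, j in product(range(2), repeat=2))

for x, y in [([1, 2], [3, -1]), ([Fraction(1, 2), -5], [7, Fraction(2, 3)])]:
    assert B(x, y) == from_monomials(x, y)
print(M)   # [[1, 2], [0, -1]]
```

The $2^2 = 4$ entries of `M` are exactly the coordinates of `B` in the monomial basis.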

We know that hence we can define the usual

Consider the following Vector Space :

Cartesian Product of Vector Spaces

- Examples and Proof Provided by Axler's Book

Example Note the following:

Consider the following vector spaces

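Given F.V.S.'s $V_1, \dots, V_m$, the product $V_1 \times \dots \times V_m$ forms an F.V.S. Let's consider what this looks like as a set: $V_1 \times \dots \times V_m = \{(v_1, \dots, v_m) : v_i \in V_i\}$. Let's define the following operations: $$(u_1, \dots, u_m) + (v_1, \dots, v_m) = (u_1 + v_1, \dots, u_m + v_m), \qquad a(v_1, \dots, v_m) = (av_1, \dots, av_m)$$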

Proof and scalars

- Closure under addition:

- Associativity

- Commutativity

- Identity

- Inverse

Pick any vector v, then

- Scalar Multiplication Closure

- Distribution Properties

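(prop) $\dim(V_1 \times \dots \times V_m) = \dim V_1 + \dots + \dim V_m$

Proof: Let $B_i$ denote the basis of vector space $V_i$, where it contains $n_i$ vectors. Let's also denote $0_i$ to be the additive identity of vector space $V_i$. Claim: the basis of $V_1 \times \dots \times V_m$ can be split up based on the dimension of each vector space, e.g. for the Cartesian product of 2 vector spaces: the vectors $(u, 0_2)$ with $u \in B_1$ together with the vectors $(0_1, w)$ with $w \in B_2$.

(Lem 1): Given an F.V.S. V, its additive identity alone, $\{0\}$, forms a subspace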

Proof

- Closure under addition:

- Scalar multiplication:

- Additive Identity Exists:

Trivial by construction

Since it's a subspace, by prop…471-addbacklink it is also a vector space!

(Lem 2) Given F.V.S. V, W, and are vector spaces with the same dimension as V

Proof is an isomorphism

By construction of the homomorphism, that is the only element that maps to 0.

The proof for the other direction is entirely the same,

Now, for every vector space , construct vector spaces of the same size by using the following cartesian product : be a vector space called . Claim:

- Trivial

- Trivial Therefore,

Fact

In an additive category, such as abelian groups or vector spaces over a field F, the Cartesian product and the direct sum of finitely many objects are the same thing! https://math.stackexchange.com/questions/39895/the-direct-sum-oplus-versus-the-cartesian-product-times https://en.wikipedia.org/wiki/Biproduct https://en.wikipedia.org/wiki/Additive_category Using this fact, we will use the direct sum to denote (finite) Cartesian products, just because it looks cleaner:

Clearing up the confusion

- The latter is just better notation

- The former is all linear maps, the latter is all bi-linear maps!

Tensor Product

Notation and Definition dump

We say something is linear when

We say something is bi-linear when and

We say something is tri-linear when

We say something is multi-linear/poly-linear/n-linear if

- Definition adapted from : https://www.math.lsu.edu/~lawson/Chapter9.pdf

BEST SLOGAN : “Tensor products of vector spaces are to Cartesian products of sets as direct sums of vectors spaces are to disjoint unions of sets.”

Goated Sources:

- https://www.math.brown.edu/reschwar/M153/tensor.pdf

- https://people.math.harvard.edu/~elkies/M55a.10/tensor.pdf

- https://www.cin.ufpe.br/~jrsl/Books/Linear%20Algebra%20Done%20Right%20-%20Sheldon%20Axler.pdf

BIG BOY TIME

Lecture 32: 4/5 :Multi-Linear Functionals

The connection between group theory and Linear Algebra!

In order to truly appreciate the beauty of multilinear functionals, we must explore group theory, the study of symmetry, which provides their building blocks! Review : 410 Branch

Fancy Bilinear Transformation Definition

Recall: using group theory, we can write this span more concisely in the following way!

MultiLinear Functional

Which describe all n-linear functions from , then Lets look at let Hence the basis for will be denoted as The dual basis :

By definition, an n-linear functional is the same thing as

The det operation is Anti-symmetric

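Anti-symmetry: a multilinear function $F$ is antisymmetric if swapping two of its arguments flips the sign, $F(\dots, v_i, \dots, v_j, \dots) = -F(\dots, v_j, \dots, v_i, \dots)$. The determinant is antisymmetric! What we basically did was swap two of the arguments.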

Concrete Example :

Week 13

Lecture 33: 4/8 : Decomposition of Bi-Linear Maps and Intro to K-Linear maps

(Lem)

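Suppose we consider two linear maps $\Lambda$ and $\Lambda'$ on the space of bilinear functionals $V \times V \to F$ (with $\operatorname{char} F \neq 2$, so that we can divide by 2) such that $$(\Lambda B)(v_1, v_2) = \frac{1}{2}\big(B(v_1, v_2) + B(v_2, v_1)\big)$$ $$(\Lambda' B)(v_1, v_2) = \frac{1}{2}\big(B(v_1, v_2) - B(v_2, v_1)\big)$$ These are known as symmetrizers.

(Lem) $\Lambda + \Lambda' = \mathrm{Id}$ and $L(V^{\times 2}; F) = L^{sym}(V^{\times 2}; F) \oplus L^{asym}(V^{\times 2}; F)$

Proof 1) $L^{sym}$ and $L^{asym}$ together give all of $L(V^{\times 2}; F)$, and they intersect trivially:

Suppose that we pick a $B \in L(V^{\times 2}; F)$. Observe that $B = \Lambda B + \Lambda' B$, where $\Lambda B$ is symmetric and $\Lambda' B$ is antisymmetric; hence $L(V^{\times 2}; F) = L^{sym}(V^{\times 2}; F) + L^{asym}(V^{\times 2}; F)$.

Suppose $B \in L^{sym}(V^{\times 2}; F) \cap L^{asym}(V^{\times 2}; F)$; then $B(v_1, v_2) = B(v_2, v_1) = -B(v_1, v_2)$, hence $2B = 0$ and $B = 0$.

Therefore, $L(V^{\times 2}; F) = L^{sym}(V^{\times 2}; F) \oplus L^{asym}(V^{\times 2}; F)$.

Proof 2) Symmetrizers are projections: $\Lambda$ is a projection onto $L^{sym}(V^{\times 2}; F)$ along $L^{asym}(V^{\times 2}; F)$, and $\Lambda'$ is a projection onto $L^{asym}(V^{\times 2}; F)$ along $L^{sym}(V^{\times 2}; F)$. Find their kernels and images.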
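Representing a bilinear functional on $F^2$ by its matrix $M_{ij} = B(e_i, e_j)$, swapping the arguments just transposes $M$, so $\Lambda$ and $\Lambda'$ become $(M + M^\top)/2$ and $(M - M^\top)/2$. A sketch checking the lemma's claims on a hypothetical example, with exact arithmetic over $\mathbb{Q}$ (so dividing by 2 is fine):

```python
from fractions import Fraction

HALF = Fraction(1, 2)

def transpose(M):
    return [list(r) for r in zip(*M)]

def sym(M):
    """Lambda: matrix of (B(v1, v2) + B(v2, v1)) / 2, given the matrix M of B."""
    return [[HALF * (a + b) for a, b in zip(r, s)] for r, s in zip(M, transpose(M))]

def asym(M):
    """Lambda': matrix of (B(v1, v2) - B(v2, v1)) / 2."""
    return [[HALF * (a - b) for a, b in zip(r, s)] for r, s in zip(M, transpose(M))]

def add(X, Y):
    return [[a + b for a, b in zip(r, s)] for r, s in zip(X, Y)]

M = [[1, 2], [5, -3]]          # hypothetical B, with M[i][j] = B(e_i, e_j)
S, A = sym(M), asym(M)
ZERO = [[0, 0], [0, 0]]

assert add(S, A) == M                      # Lambda + Lambda' = Id
assert sym(S) == S and asym(A) == A        # both are projections
assert sym(A) == ZERO and asym(S) == ZERO  # each kills the other's image
assert transpose(S) == S                   # image of Lambda is symmetric
assert transpose(A) == [[-x for x in r] for r in A]   # image of Lambda' is antisymmetric
```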

Generalization : K-linear maps

Suppose we consider the following vector space :

-

,

- (Note, these are the same vector space, but with different bases)

-

Bases :

-

We will use this shorthand

Special Maps

Symmetric Maps , is symmetric if:

Let

Anti-Symmetric Maps (or Skew-Symmetric) , is Anti-Symmetric if:

Let

Alternating maps , is Alternating if:

Let

Lecture 34 : 4/10 Groups acting on Vector Spaces

More Definitions

Why do we need groups?

Because we can use groups to act on sets, which is helpful in counting the number of elements in a basis! A group's purpose in life is to act on things

Useful Properties :

- acts on ex) Let and

Prove defines a group action

A try :

F is antisymmetric if

- Problem! Too many permutations!

Another try!

More problematic than appears

look :

!! It works out!!!

“swap of changing letters can be done in different ways!”

Perform different out of a simple permutation

Perm = the parity of the number of the same is the same

Perm = different computations of a simple permutation

Consider a such that

Now suppose we consider acting on this using

remember one important fact about the symmetric group, their inverses!

In order for our equations to match up, we need to consider the following :

in order for the two to be equal, we must actually permute the vertices of the functionals

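Now define the space of antisymmetric k-linear maps (also called alternating maps; the two notions agree outside characteristic 2)

number of choices : n choose k

dim (of the alternating k-linear maps on V) = n choose k, where n = dim V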
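One way to sanity-check the count $\binom{n}{k}$ over $\mathbb{Q}$: encode a k-linear functional on $F^n$ by its $n^k$ values on tuples of basis vectors, antisymmetrize every basis tensor, and compute the rank of the results. This is a brute-force sketch (exact `Fraction` arithmetic, small n and k only), not a proof; the helper names are hypothetical.

```python
from fractions import Fraction
from itertools import permutations, product
from math import comb

def sign(p):
    """Sign of a permutation of 0..k-1, via the parity of its inversions."""
    inv = sum(1 for a in range(len(p)) for b in range(a + 1, len(p)) if p[a] > p[b])
    return -1 if inv % 2 else 1

def rank(vectors):
    """Rank over Q, by Gaussian elimination to row echelon form."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    for c in range(len(rows[0])):
        pr = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pr is None:
            continue
        rows[r], rows[pr] = rows[pr], rows[r]
        for i in range(r + 1, len(rows)):
            f = rows[i][c] / rows[r][c]
            rows[i] = [x - f * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r

def alternating_dim(n, k):
    """Dimension of the span of sum_sigma sign(sigma) e_{I o sigma} over I in {0..n-1}^k."""
    index = {I: pos for pos, I in enumerate(product(range(n), repeat=k))}
    vectors = []
    for I in index:
        v = [0] * len(index)
        for p in permutations(range(k)):
            v[index[tuple(I[t] for t in p)]] += sign(p)
        vectors.append(v)
    return rank(vectors)

assert all(alternating_dim(n, k) == comb(n, k) for n, k in [(3, 2), (4, 2), (4, 3)])
```

Tuples with a repeated index antisymmetrize to 0, which is why only the $\binom{n}{k}$ strictly increasing index choices survive.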

Question :

Suppose we examine a finite field of 2 elements and we form a vector space of dimension 2 :

Examine the following :

Basis:

Lecture : 4/12

- Fix :

- =

because it is symmetric

k=2

Each

is symmetric!

proof :

Want :

NOTES : remember, the is an abelian group as it is isomorphic to

Therefore, the spanning set is linearly independent, so it forms a basis!

Question : What are the dimensions?

Span =

combinations with repetition of k indices out of n = dim V: $\binom{n + k - 1}{k}$

Segue into the Determinant!

Focus on

Let is determined up to a particular scalar is determined by

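$\det$ is that functional whose value on $(e_{i_1}, \dots, e_{i_n})$ is $\varepsilon(i_1, \dots, i_n)$, where $\varepsilon$ is the famous epsilon function (+1 on even permutations, -1 on odd permutations, 0 if an index repeats)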
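A direct (and slow, $n!$ terms) sketch of that formula: $\det A = \sum_{\sigma \in S_n} \varepsilon(\sigma)\, a_{1\sigma(1)} \cdots a_{n\sigma(n)}$, with $\varepsilon$ computed from the parity of inversions. The helper names and example matrices are illustrative:

```python
from itertools import permutations

def sign(perm):
    """epsilon(perm): +1 for an even number of inversions, -1 for odd."""
    inversions = sum(1 for a in range(len(perm))
                     for b in range(a + 1, len(perm)) if perm[a] > perm[b])
    return -1 if inversions % 2 else 1

def det(A):
    """Leibniz formula: sum over sigma in S_n of eps(sigma) * prod_i a_{i, sigma(i)}."""
    n = len(A)
    total = 0
    for sigma in permutations(range(n)):
        term = sign(sigma)
        for i in range(n):
            term *= A[i][sigma[i]]
        total += term
    return total

print(det([[1, 2], [3, 4]]))                      # -2, i.e. 1*4 - 2*3
print(det([[2, 0, 1], [1, 3, 2], [1, 1, 2]]))     # 6
```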

Conventions :

look at the formulas on the right!

is a vector, is a functional denotes the 1st basis vector in v, denote the first linear functional of v, This is near as or which looks exactly like the dot product in a simple way :

Week 14

Lec 36: 4/15

Determinant Properties!

:

Properties :

- $\det(A) \neq 0 \iff A$ is invertible

Cancellation Law of Group Theory!

Fact :

Let

E.R.O. V.S. Det(A)

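if two rows are the same, then $\det(A) = 0$ in every characteristic; the quick argument "swapping the two equal rows gives $\det(A) = -\det(A)$, so $\det(A) = 0$" only works outside characteristic 2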

Properties :

- Row swap :

-

- Why :

- This expression is nonzero

upper triangular: $\det(A)$ is the product of the diagonal entries

(4 2 1 1) ←> (3 1 1 1)
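A sketch of these E.R.O. effects, checked with the Leibniz formula on an arbitrary $3 \times 3$ example (`det` and `sign` are hypothetical helper names; rows in the comments are counted from 1):

```python
from itertools import permutations

def sign(p):
    """Parity of the number of inversions: +1 even, -1 odd."""
    inv = sum(1 for a in range(len(p)) for b in range(a + 1, len(p)) if p[a] > p[b])
    return -1 if inv % 2 else 1

def det(A):
    """Leibniz formula."""
    total = 0
    for s in permutations(range(len(A))):
        term = sign(s)
        for i, j in enumerate(s):
            term *= A[i][j]
        total += term
    return total

A = [[2, 0, 1], [1, 3, 2], [1, 1, 2]]
d = det(A)                                                      # 6

swapped = [A[1], A[0], A[2]]                                    # swap rows 1 and 2
scaled = [A[0], A[1], [5 * x for x in A[2]]]                    # multiply row 3 by 5
added = [A[0], [x + 4 * y for x, y in zip(A[1], A[0])], A[2]]   # row 2 += 4 * row 1
repeated = [A[0], A[0], A[2]]                                   # two equal rows
upper = [[2, 7, -1], [0, 3, 4], [0, 0, 5]]                      # upper triangular

assert det(swapped) == -d          # a swap flips the sign
assert det(scaled) == 5 * d        # scaling a row scales det
assert det(added) == d             # adding a multiple of another row changes nothing
assert det(repeated) == 0          # equal rows give 0
assert det(upper) == 2 * 3 * 5     # product of the diagonal
```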

Determinants as expansions by minors of a row/a column

Recall, it's linear with respect to each column!

A linear function :

or

question : What is the coefficient?!

what are there existion marks

Adjoint (classical adjoint, or adjugate) of a matrix :

4 equations

Property!

- exists

- If A is a 0 divisor!
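Assuming "adjoint" here means the classical adjoint (adjugate), $\operatorname{adj}(A)_{ij} = (-1)^{i+j}\det(A \text{ with row } j \text{ and column } i \text{ removed})$, here is a sketch checking $A\operatorname{adj}(A) = \operatorname{adj}(A)A = \det(A)\,I$. When $\det A = 0$ but $\operatorname{adj}(A) \neq 0$, the same identity shows that $A$ is a zero divisor. The example matrices are arbitrary and the helper names are hypothetical:

```python
def minor(A, i, j):
    """A with row i and column j deleted."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]

def det(A):
    """Cofactor expansion along the first row."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))

def adj(A):
    """Classical adjoint: adj(A)_ij = (-1)^(i+j) det(A with row j, column i removed)."""
    n = len(A)
    return [[(-1) ** (i + j) * det(minor(A, j, i)) for j in range(n)] for i in range(n)]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

A = [[2, 0, 1], [1, 3, 2], [1, 1, 2]]
d = det(A)
dI = [[d if i == j else 0 for j in range(3)] for i in range(3)]
assert matmul(A, adj(A)) == dI and matmul(adj(A), A) == dI

S = [[1, 2], [2, 4]]                            # det(S) = 0, but adj(S) != 0
assert det(S) == 0 and adj(S) != [[0, 0], [0, 0]]
assert matmul(S, adj(S)) == [[0, 0], [0, 0]]    # so S is a zero divisor
```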

Consider $\mathbb{F}_2$, a field of 2 elements

number of functions : unique functions

Claim!

In all characteristics, In not characteristic 2, In Characteristic 2, In characteristic not 2,

Question 1) What is the total number of linear functions from

- , hence the dimension of all linear functions from is 2

- Claim 1: In characteristic 2, every symmetric matrix is antisymmetric (since $-1 = 1$)

Number of symmetric : Only in characteristic not 2

Case 1 k = 1:

case 2 : k = 2:

case 3 : k = 3?

In characteristic 2 :

for bilinear maps, consider the following :

functionals on their own are not bi-linear, so we have to multiply!

consider the following for a V.S. of dimension 3

is not bilinear, however, is not bilinear. just doesn’t make sense! So, the basis of consists of 9 elements, choosing 1 from the left and 1 from the right

Question : what linear combination of

Observation: Consider a vector v with elements , where each element is non-zero. then

But notice something : , so in order to find its counterpart , we should also consider that is also a problem as

Lemma 1) : Proof : Therefore, we can construct this monomial such that

Note: If , then our linear combination is not linearly independent as , therefore will always be 0 Conclusion, we just permute both i and j!, we need cycle that must be length 2: Lets consider (as our vector space is 3 dimensional). and let , if we consider all options, of , we get the following :

- e :
- (12) :
- (23) :
- (13) :
- (123) :
- (132) :

From this example, there is only one permutation that uses the element : (funnily enough, it's the ). Lemma 2)

The big thing to consider is that while the vector spaces have the same dimension, they might have different bases and thus different functionals!

Hence, we have witnessed that ! It's just a permutation of the indices!

Now consider the following :

IT'S JUST acting on a set of n numbers; in our case, it's

acting on

https://math.stackexchange.com/questions/242812/s-n-acting-transitively-on-1-2-dots-n

acting on =

acting on is

acting on is

acting on should have

- https://math.stackexchange.com/questions/3210062/whats-the-difference-between-the-following-nk-1-choose-k-nk-1-choosek

- https://math.stackexchange.com/questions/4716950/combinations-with-repetition-nr-1-choose-r-1-nr-1-choose-r?rq=1

Consider a vector space of dimension n over a field F. Let denote the space of all k-linear maps from . Prove that the dimension of , the space of all symmetric k-linear maps, is \binom{n+k-1}{k}.
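A brute-force sanity check of the count (not a proof), under the usual argument: a k-linear map is fixed by its values on basis tuples (e_{i_1}, ..., e_{i_k}), giving n^k free values, and a symmetric one only by those values up to reordering, i.e. one value per orbit of S_k acting on index tuples. The function name symmetric_dim is hypothetical; math.comb needs Python 3.8 or later.

```python
from itertools import product
from math import comb


def symmetric_dim(n, k):
    """Count orbits of S_k acting on index tuples (i_1, ..., i_k) in {0..n-1}^k.

    Sorting a tuple picks one canonical representative per orbit
    (equivalently, a multiset of k indices chosen from n).
    """
    return len({tuple(sorted(t)) for t in product(range(n), repeat=k)})


for n, k in [(3, 2), (5, 3), (4, 4)]:
    # all k-linear maps: n**k; symmetric ones: orbit count vs. the binomial formula
    print(n, k, n ** k, symmetric_dim(n, k), comb(n + k - 1, k))
```

For n = 5, k = 3 this prints 125 for all 3-linear maps and 35 for the symmetric ones.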

Known Facts :

Lec 38: 4/18

The Jordan Normal Form of a Linear Map (Ch 6, 7)

Problem : Given a space V,

Goal : Classify Linear maps

Produce a small (Finite) list of maps such that any is equivalent to exactly 1 on the list

ex) We’ve seen an example

, such that

Classification of such that

such that must T such that Want to quit , any P, Q must

Making the problem precise:

Problem: Classify matrices up to similarity, i.e. A is equivalent to B if B = P^{-1}AP for some invertible P
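A minimal sketch of similarity for 2x2 matrices in exact rational arithmetic: conjugate A by an invertible P and check that trace and determinant survive. The matrices and helper names are made up for illustration.

```python
from fractions import Fraction


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def inverse_2x2(P):
    """Inverse of a 2x2 matrix via the adjugate formula, in exact arithmetic."""
    (a, b), (c, d) = P
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("P is not invertible")
    return [[d / det, -b / det], [-c / det, a / det]]


def trace(A):
    return sum(A[i][i] for i in range(len(A)))


def det2(A):
    return A[0][0] * A[1][1] - A[0][1] * A[1][0]


A = [[1, 2], [3, 4]]
P = [[1, 1], [0, 1]]
B = matmul(matmul(inverse_2x2(P), A), P)  # B = P^{-1} A P, so B is similar to A

print(B)                                     # Fractions equal to [[-2, -4], [3, 7]]
print(trace(A), trace(B), det2(A), det2(B))  # 5 5 -2 -2
```

Equal trace and determinant are necessary for similarity but not sufficient: the identity I and the Jordan cell [[1, 1], [0, 1]] share both, yet only I is similar to I.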

Ex)

If allowed, is equivalent to , where

Is that true?

In general :

When

Now, if it's a square matrix:

recall :

Fundamental result actually uses a neutering of the Jordan Normal Form

Hard problem : Simplification

Given : Q) What does it do? Idea : Find , such that is “Good”

Amazing idea: “Good” means diagonal, i.e. P^{-1}TP = \operatorname{diag}(c_1, \dots, c_n), with the c_i on the diagonal and zeros elsewhere. The degrees of freedom are decoupled.

Q) Is every matrix diagonalizable? I.e., for any T, does there exist P such that

Example : Basically symmetric matrix!

What does it mean?

Eigenvector

Given T, a vector v is called an eigenvector of eigenvalue c if Tv = cv and v ≠ 0

T is diagonalizable if there exists a basis of eigenvectors

Q) Is there at least 1 eigenvector?

- Yes, iff (T - cI)v = 0 has a nonzero solution v for some c

eigenvalue = characteristic value, eigenvector = characteristic vector

Characteristic Polynomial

The characteristic polynomial of T is \det(xI - T)
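A minimal sketch computing the coefficients of det(xI - A) without expanding a symbolic determinant, using the Faddeev-LeVerrier recurrence (a swapped-in technique, not one from the lecture). It divides by k = 1..n, so it assumes those integers are invertible in the field; here everything is done over the rationals with Fraction.

```python
from fractions import Fraction


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def char_poly(A):
    """Coefficients of det(xI - A), highest degree first, via Faddeev-LeVerrier.

    Recurrence: M_0 = 0, c_n = 1, and for k = 1..n
        M_k = A M_{k-1} + c_{n-k+1} I,   c_{n-k} = -tr(A M_k) / k.
    """
    n = len(A)
    A = [[Fraction(a) for a in row] for row in A]
    coeffs = [Fraction(1)]
    M = [[Fraction(0)] * n for _ in range(n)]
    for k in range(1, n + 1):
        AM = matmul(A, M)
        # M_k = A M_{k-1} + c_{n-k+1} I, where c_{n-k+1} is the last coefficient found
        M = [[AM[i][j] + (coeffs[-1] if i == j else 0) for j in range(n)] for i in range(n)]
        AM = matmul(A, M)
        coeffs.append(-sum(AM[i][i] for i in range(n)) / k)
    return coeffs


print([str(c) for c in char_poly([[1, 2], [3, 4]])])                   # ['1', '-5', '-2']
print([str(c) for c in char_poly([[2, 1, 0], [0, 2, 0], [0, 0, 3]])])  # ['1', '-7', '16', '-12']
```

The second example is upper triangular, so its characteristic polynomial is (x - 2)^2 (x - 3) = x^3 - 7x^2 + 16x - 12, read straight off the diagonal.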

WE WANT EIGENVALUES, so from now on, F is algebraically closed

Let V be a vector space over a field F of dimension n. Find the dimension of the space of all k-linear maps:

Suppose n = 5, k = 3

BUT

Lec 39 : 4/24 : Jordan Normal Form of a Matrix

Recall : over F

diagonalizable

Experimental Material :

Not every linear map is diagonalizable :

- T : \Cmatrix{}{c & 1 & 0 & ... \\ 0 & c & 1 & 0 ...\\ \vdots \\ 0 & ... & c & 1 \\ 0 & ... & 0& c}
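A small sketch of why a Jordan cell fails to be diagonalizable: with N = J - cI, N sends e_1 to 0 and each e_k to e_{k-1}, a single chain. The kernel of N is spanned by e_1 alone, and c is the only eigenvalue, so for n ≥ 2 there is no basis of eigenvectors. The values c = 5, n = 4 and the helper names are arbitrary choices for illustration.

```python
def jordan_cell(c, n):
    """n x n Jordan cell: c on the diagonal, 1 on the superdiagonal, 0 elsewhere."""
    return [[c if i == j else (1 if j == i + 1 else 0) for j in range(n)] for i in range(n)]


def apply(M, v):
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]


c, n = 5, 4
J = jordan_cell(c, n)
N = [[J[i][j] - (c if i == j else 0) for j in range(n)] for i in range(n)]  # N = J - cI
e = [[1 if i == k else 0 for i in range(n)] for k in range(n)]              # e_1, ..., e_n

# N walks down the chain e_n -> e_{n-1} -> ... -> e_1 -> 0.
for k in range(n):
    print(f"N e_{k + 1} =", apply(N, e[k]))
```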

Ways to solve :

- Note :

\Cmatrix{}{c & 1 & 0 & ... \\ 0 & c & 1 & 0 ...\\ \vdots \\ 0 & ... & c & 1 \\ 0 & ... & 0& c} \iff there is a chain

but

Also :

- Doing a concrete example

\Cmatrix{}{3 & 1 & -1\\ 2 & 2 & -1\\ 2 & 2 & 0} : \C^3 \to \C^3

From this, it follows that T is not diagonalizable.

Eigenvectors:

[T]_{\{\alpha_1, \alpha_2, \alpha_3\}} = \Cmatrix{}{1 & 0 & 0\\ 0 & 2 & 1\\ 0 & 0 & 2}

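Fact: on \operatorname{span}\{\alpha_2, \alpha_3\}, (T - 2\,\mathrm{Id})^2 = \Cmatrix{}{0 & 1\\ 0 & 0}^2 = \Cmatrix{}{0 & 0\\ 0 & 0}, so \operatorname{span}\{\alpha_2, \alpha_3\} \subseteq \ker(T - 2\,\mathrm{Id})^2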
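A sketch checking this example numerically, assuming the matrix has (1,1) entry 3, since anything similar to the triangular form with diagonal 1, 2, 2 must have trace 5. For each eigenvalue c it computes dim ker(A - cI) (eigenspace) and dim ker(A - cI)^n (root space) from exact ranks over the rationals; helper names are hypothetical.

```python
from fractions import Fraction


def rank(M):
    """Rank over Q by Gauss-Jordan elimination on an exact copy of M."""
    M = [[Fraction(x) for x in row] for row in M]
    r = 0
    for col in range(len(M[0])):
        pivot = next((i for i in range(r, len(M)) if M[i][col] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(len(M)):
            if i != r and M[i][col] != 0:
                factor = M[i][col] / M[r][col]
                M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        r += 1
    return r


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def shift(A, c):
    """A - cI."""
    return [[A[i][j] - (c if i == j else 0) for j in range(len(A))] for i in range(len(A))]


A = [[3, 1, -1], [2, 2, -1], [2, 2, 0]]  # assumption: (1,1) entry is 3 so the trace is 5
n = len(A)
dims = {}
for c in (1, 2):
    N = shift(A, c)
    power = N
    for _ in range(n - 1):
        power = matmul(power, N)              # power = (A - cI)^n
    dims[c] = (n - rank(N), n - rank(power))  # (eigenspace dim, root space dim)
print(dims)  # {1: (1, 1), 2: (1, 2)}
```

The eigenspaces only add up to 1 + 1 = 2 < 3, so there is no eigenbasis, while the root spaces add up to 1 + 2 = 3 and fill \C^3.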

B: The result :

(Def) Root space

The kernel : is called the root space. It is denoted as : Note :

- , if , then it means that it is diagonalizable!

- , we can also see that

- is a subspace!

(Lem) The root space is T-invariant, i.e.

Proof

(Thm)

- For each j, there is a basis of the j-th root space such that the matrix of the restriction of T to it is block diagonal, each block being a Jordan cell (the form shown below).

- The collection of block sizes, up to order, is independent of the basis!
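One way to see the second point, and a partial answer to the question that follows: with N = T - c_j I, the number of cells of size at least k equals rank(N^{k-1}) - rank(N^k). Ranks do not depend on the basis, so neither do the sizes; the root space dimension only fixes their sum. A sketch under these assumptions, with hypothetical helper names and a made-up example (cells of sizes 3 and 1 for eigenvalue 2, one cell of size 2 for eigenvalue 5), conjugated by an elementary matrix to change basis:

```python
from fractions import Fraction


def rank(M):
    """Rank over Q by Gauss-Jordan elimination on an exact copy of M."""
    M = [[Fraction(x) for x in row] for row in M]
    r = 0
    for col in range(len(M[0])):
        pivot = next((i for i in range(r, len(M)) if M[i][col] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(len(M)):
            if i != r and M[i][col] != 0:
                factor = M[i][col] / M[r][col]
                M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        r += 1
    return r


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def jordan_matrix(cells):
    """Block-diagonal matrix with one Jordan cell per (eigenvalue, size) pair."""
    n = sum(size for _, size in cells)
    J = [[0] * n for _ in range(n)]
    start = 0
    for c, size in cells:
        for i in range(start, start + size):
            J[i][i] = c
            if i + 1 < start + size:
                J[i][i + 1] = 1
        start += size
    return J


def cell_sizes(A, c):
    """Sizes of the Jordan cells for eigenvalue c, recovered from ranks only."""
    n = len(A)
    N = [[A[i][j] - (c if i == j else 0) for j in range(n)] for i in range(n)]
    power = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
    ranks = [n]  # rank(N^0) = rank(I) = n
    for _ in range(n):
        power = matmul(power, N)
        ranks.append(rank(power))
    # at_least[k - 1] = number of cells of size >= k; padded with a final 0
    at_least = [ranks[k - 1] - ranks[k] for k in range(1, n + 1)] + [0]
    sizes = []
    for k in range(1, n + 1):
        sizes += [k] * (at_least[k - 1] - at_least[k])  # cells of size exactly k
    return sorted(sizes, reverse=True)


J = jordan_matrix([(2, 3), (2, 1), (5, 2)])
# Change basis with P = I + 7 E_{1,6}, whose inverse is I - 7 E_{1,6}.
n = len(J)
P = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
P_inv = [row[:] for row in P]
P[0][5], P_inv[0][5] = 7, -7
A = matmul(matmul(P_inv, J), P)

print(cell_sizes(J, 2), cell_sizes(A, 2), cell_sizes(A, 5))  # [3, 1] [3, 1] [2]
```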

Question : Are the sizes of the blocks dependent on the dimension of the root space?

Jordan Cell !

\Cmatrix{}{c_j & 1 & 0 & ... \\ 0 & c_j & 1 & 0 ...\\ \vdots \\ 0 & ... & c_j & 1 \\ 0 & ... & 0& c_j}

Suppose T is upper triangular with diagonal entries c_1, \dots, c_n; then its determinant is c_1 c_2 \cdots c_n.

Now suppose it's in Jordan normal form

sizes of all cells with c_j on the diagonal

Cases and examples :

2 cases! \Cmatrix{}{0 & *\\ 0 & *}, \Cmatrix{}{c_1 & 1\\ 0 & c_1}